by Daniel Brouse

May 25, 2025

In our last R&D and A&R deep dive, “AI, Copyrights, and the Music Industry: A Glimpse into the Future of Intellectual Property,” we explored the growing legal and ethical complexities facing artists who use artificial intelligence in their creative workflows. But as complicated as things were then, the latest development pushes us even further into absurdity: welcome to the era of automated and self-inflicted copyright enforcement.

In that previous case, my digital music distributor had flagged one of my own AI-generated releases, questioning whether I owned the rights. That was odd enough—essentially, my distribution partner was getting into a dispute with my AI assistant over something I had created. But now, the situation has taken a new turn.

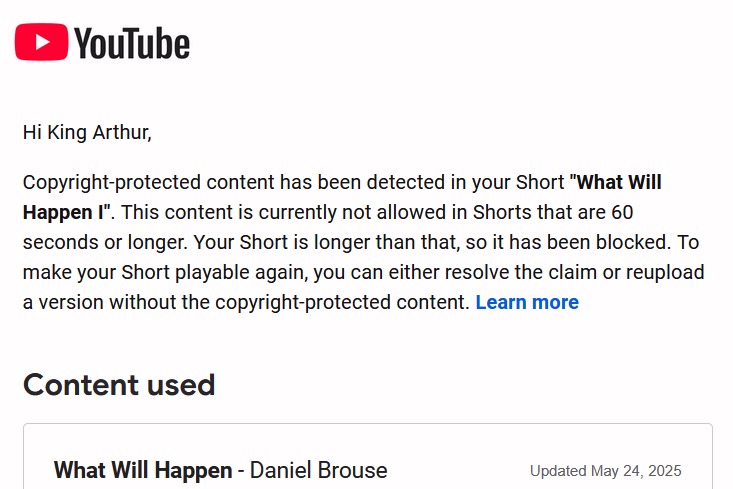

This week, I was notified that YouTube had removed one of my own videos from my channel because the background music in the video was “owned” by me through my digital distributor. Yes, you read that correctly: my distributor triggered a Content ID claim against me, on my behalf.

To restore the video, YouTube now requires that I “submit a dispute to the content owner if you have the rights to the content.” The process continues: “When you dispute a Content ID claim, the person that claimed your video (the claimant) is notified. The claimant has 30 days to respond.”

So now I must formally dispute myself to restore a song that I composed, performed, and uploaded.

The track in question, “What Will Happen Pt. 1,” was created with the help of my AI assistant, trained on my own musical data over the course of a year. That means I now have three virtual agents (my AI assistant, my distributor’s AI system, and YouTube’s Content ID) all fighting one another over a song that I indisputably own.

This is no longer a matter of legal or technical nuance. It’s an existential glitch in the new digital music ecosystem. None of these systems was designed to weigh ownership with this much context, and certainly not to recognize when it is attacking its own creator.

I never instructed any of these tools to act against me. But the automation is now operating independently, enforcing policies that treat creators as potential infringers, even when there is no infringement. This is more than inconvenient; it’s reputational damage, business disruption, and the beginning of a deeply flawed feedback loop.

What we’re seeing is the early chaos of a system that’s automating enforcement faster than it’s developing the intelligence to understand context. As AI becomes more entrenched in content creation, content distribution, and content policing, the potential for misfires like this will only grow.

It raises urgent questions:

- Who is ultimately responsible when automated systems attack legitimate creators?
- What recourse do independent artists have when the enforcement tools are in conflict with each other, and even with themselves?
- And most fundamentally, how can we create digital ecosystems where creators aren’t penalized by their own tools?

One thing is clear: we need new protocols for authorship, ownership, and automated enforcement—before the machines get too far ahead of the musicians.